This article is more than 5 years old.

The University of Washington campus with Mt. Rainier in the distance

The Library Assessment Conference is held biennially and is the largest conference of its kind (with over 600 registrants). This year it is being held in Seattle at the University of Washington. This is my first time attending this particular conference. Although I know that Assessment (with a capital A) is an enormous trend in academic libraries these days, it was still surprising to find that you could fill a three-day conference with 27 “sessions,” each with three or more presentations, totaling over 100! And all about assessment! The sessions are divided into tracks (papers, panels and lightning talks), and each of these is themed (collections, methods, collaboration, space, teaching/learning, etc.). The Monday afternoon poster session contained 45 posters in 4 tracks. So it’s been a challenge to pick and choose what would be most valuable to bring back to ZSR (especially when the weather is unseasonably warm and some of the meeting rooms are not air-conditioned). I have found the lightning rounds particularly useful, since they have typically been case studies that give practical how-tos on the presenters’ projects. It didn’t take long to settle into the jargon: impact, value, metrics, data visualization, response rate, methodology, analytics, evidence-based decision making… well, you get the idea.

Rather than give a blow-by-blow account, I’d like to highlight some things that caught my attention, link to some tools and articles that might be useful, and show a few images from my tour of the library spaces (the Research Commons and the newly renovated Odegaard Undergraduate Library). So much was presented over the three days that I’ll need time to wrap my head around some of it, and writing this will help me! I have pages of notes, so I am glad to dig in more deeply if any of the concepts catch your fancy!

The keynote speeches were themed “Change.” There were three keynoters (Margie Jantti, Debra Gilchrist, and David Kay), and here are a few excerpts from each:

Jantti

- Be an indispensable partner: demonstrable benefit to the university’s current and aspirational state.

- What data is missing? What don’t we know, even with what data is put before us?

- Active listening is key. We are guilty of pushing forward what WE think is important.

- Students are the lifeblood of university budgets. We need data to better understand the library’s impact on student risk and success.

Gilchrist

- Creating a culture of inquiry is a philosophical viewpoint.

- Contextualize everything we do within institutional priorities.

Kay

- Do we use data to get to things that are self-evident?

- Why is data king? Attitude, technology and necessity

- Breakthrough areas: student success (learning analytics/student retention) and research metrics (altmetrics) have seized the moment; their communities have been eloquent in spinning their vision

- Library analytics have remained fuelled by library and library community data, focused on processes (such as collection management) and constrained by application silos (such as ILS or the gate system)

- Question: should we (1) get whatever data we can and let it tell its story, even if we don’t yet know what that story might be, or (2) collect only data specific to areas of interest or known to be useful?

- Where will external data come from and how can it be woven with library data to tell the story?

- Predictive analytics will be part of the future of higher ed, and libraries need to be involved in the design

Students

One theme I keep hearing about is involving students in assessment activities. Several schools have active student advisory boards, while others employ student assistants to carry out assessment projects. One example is Virginia Tech, which has peer roving assistants who take photos (to document space use), conduct peer interviews, and do weekly seat counts. As one speaker said, “If you really want to know how to fix it, talk to the people who are using it.” Peers can be useful for this (our Library Ambassadors?).

Nuggets from various sessions:

- Information literacy takes place outside the library; this means collaboration is required.

- Assessment can be part of daily work, can be integrated, but staff need to feel that additional data collection has meaning and purpose

- Learn to use the same data source to answer short, mid and long term questions

- Don’t wait for perfect data

- Special Collections have metrics mayhem: there is a lack of standardized definitions (what constitutes a use: item level, folder level, or visit?)

- Everyone presenting on space reports that students rate the biggest need (beyond more outlets) as more quiet study (and at one school, they wanted more tables)

- Move away from assessing things and towards making decisions about things

Tours

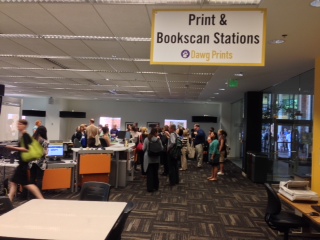

Odegaard Undergraduate Library | 2012-2013 Renovation

Main Floor of the Odegaard Library

Active Learning Classroom (Check out the Setu chairs, just like ours)

Collaborative Writing Center/Research Center (saw 16,000 students in first year!)

Railing Detail (now this is a word cloud)

My favorite sighting during the tour of the Research Commons was how they handled the need for more outlets when they were unable to drill the floors (Special Collections is housed on the floor below):

Tools mentioned by presenters

- Tableau Public (data visualization)

- Ithaka S+R Local Surveys

- Suma: Mobile Assessment Tool

- Balanced Scorecard

- Library Cube (YouTube video)

- Atlas.ti (qualitative data analysis)

- Archival Metrics Toolkit

- Aeon (Special Collections Reporting System)

Interesting Concepts/Links/Readings

- Discovering the Impact of Library Use and Student Performance

- Descriptive v. Predictive Analytics in Higher Education

- Extinction Timeline (Look for Libraries!)

- Value of Libraries for Research and Researchers

- Productive Persistence

I can’t sign off without mentioning the whole issue of recycling, which Seattle has taken to a new level (the Dean of Libraries referred to it as the “Land of Obsessive Recycling”). I actually had to study the charts to figure out how to dispose of my lunch leavings. And I wonder: who is the poor soul who has to police the choices uninformed visitors make?

5 Comments on ‘Susan at the Library Assessment Conference’

Lots of good stuff in here. Love the Extinction Timeline**

** not to be taken too seriously….a hoot!

Susan, that recycling station reminds me of the one at Whole Foods! (and I really liked the food signage!)

Those outlets are great! They remind me of what we use in the sculpture studio and in woodshop!

Ceiling outlets! I love it! Sounds like it was a useful conference!

I like how electronic database usage was tied to grades in Library Cube. That would be some great data to throw around.

Ingenious ceiling outlets! You might want to add NCSU Libraries’ Suma (http://www.lib.ncsu.edu/dli/projects/spaceassesstool) to your list of tools!