
Earlier this week, Rebecca Petersen and I attended Exploring Digital Humanities: Practicalities and Potential, a symposium hosted by Atkins Library at UNCC. The day-long event was a collaborative effort between the library and the College of Liberal Arts and Sciences. The morning was devoted to four speakers, and the afternoon (which unfortunately we could not stay for) offered hands-on sessions. Even so, it was well worth the trip to hear the four morning speakers. I am going to focus primarily on the first speaker, but Robert Morrissey presented on the ARTFL Project and PhiloLogic (a retrieval and analysis tool), Paul Youngman presented “Black Devil and Iron Angel revisited: Using Culturomics in Humanities Research,” and UNCC’s Heather McCullough introduced the Library’s new Digital Scholarship Center (which had been officially launched that very day and has a staff of 7, including a usability person, the scholarly communication librarian, a data services/GIS librarian, lab manager and the outreach librarian).

Mark Sample, from George Mason University, started the day off with an introduction to the digital humanities. In the short months that I have been trying to wrap my head around the meaning and purpose of this field, Dr. Sample offered the best explanation I’ve yet heard. He jumped right in by asking which is the correct way to ask this question: “What IS digital humanities?” or “What ARE digital humanities?” It was a quick acknowledgement that not only are people confused about the scope of the field, they don’t even know how to ask about it! Which is right? He says that in practice, the plural is correct, but grammatically, it is singular. ‘Digital humanities’ is a nebulous, made-up term. It describes practices that have been in place for a long time. Originally, it was called ‘humanities computing’; it was a way of talking about using computers to do humanities. The first instance of this goes back to 1949, when Father Roberto Busa approached IBM’s Thomas Watson to sponsor his Index Thomisticus, a corpus of the works of Saint Thomas Aquinas. The project lasted 30 years, resulted in a print version and a CD-ROM version, and is now available via the web. The term ‘digital humanities’ was coined in 2001 by John Unsworth (an LIS Dean at University of Illinois at Urbana-Champaign!) as a marketing approach to the Companion to Digital Humanities. Some thought that this term would describe humanities computing in a more palatable way! Sample gave us an interesting view of how the field developed by showing a time-lapse evolution of the Digital Humanities Wikipedia page (using the view history) since the first article was written in 2006.

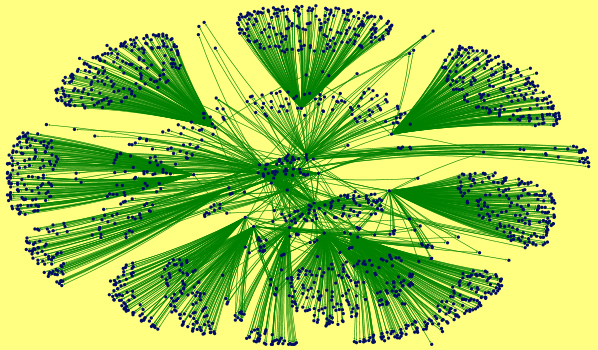

Sample sees the field of digital humanities as encompassing a two-fold endeavor: 1) it allows scholars to approach humanities in new ways using new tools that facilitate production of knowledge, and 2) it allows representation of knowledge. When discussing the Wikipedia articles, he noted that the early versions focused on presentation instead of production. One of the examples of ‘production’ he discussed was the Proceedings of the Old Bailey, which is “a fully searchable edition of the largest body of texts detailing the lives of non-elite people ever published, containing 197,745 criminal trials held at London’s central criminal court.” This project was cross-disciplinary and cross-institutional. He showed us a dot map of all the trials that have the word ‘killing’ in them, and he showed how the term ‘loveless marriage’ can be tracked. Another project he presented was the Walt Whitman Project, which he said was both production and representation. This project includes every edition of Leaves of Grass, allowing researchers to compare across editions. An example of representation is Drama in the Delta, a role-playing video game that puts the player in the experience of Arkansas camps where 15,000 Japanese were interned during World War II. It doesn’t produce new knowledge, but it is designed to represent knowledge to students in a new way.

Having bodies of searchable text of these magnitudes allows scholars to ask questions that would have been unimaginable if they had to plow through the manuscripts manually. One interesting concept he introduced was the idea of distant reading versus close reading. As opposed to “close reading” of a text, “distant reading” allows researchers to analyze not just one or two books but thousands of them at a time.

I’ve written much more than I usually permit myself in these postings, but I want to mention Youngman’s pattern analysis with Google’s Ngram Viewer and his recommendation of the Culturomics site, which has a corpus of over 5 million books. One thing he stressed about using data from Google Books (he calls Google Books a game changer) is that, yes, the data is dirty, but you can still work with it. He says that pattern analysis is what it’s all about, and these tools allow a scholar to get a larger perspective by using a shotgun approach to discover patterns. That lets you spot what could perhaps be a trend, but the key is then to collaborate with others to verify which trends are real.
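For anyone curious what this kind of pattern analysis looks like under the hood, here is a minimal sketch in Python. It is not Youngman’s method or how the Ngram Viewer is actually implemented; the toy corpus and the function names (`tokenize`, `relative_frequency_by_year`) are made up purely for illustration. The idea is simply to count how often a word appears each year as a share of all the words from that year.

```python
from collections import Counter
import re

# Hypothetical toy corpus of (publication year, text) pairs.
# A real study would load thousands of digitized books instead.
TOY_CORPUS = [
    (1840, "The railway is an iron angel of progress."),
    (1840, "Some call the railway a black devil."),
    (1850, "The railway reached the town and the railway changed it."),
]


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def relative_frequency_by_year(corpus, term):
    """Return {year: share of that year's tokens that equal `term`}."""
    hits = Counter()
    totals = Counter()
    term = term.lower()
    for year, text in corpus:
        tokens = tokenize(text)
        totals[year] += len(tokens)
        hits[year] += tokens.count(term)
    # Skip years with no tokens to avoid dividing by zero.
    return {
        year: hits[year] / totals[year]
        for year in sorted(totals)
        if totals[year]
    }


if __name__ == "__main__":
    for year, share in relative_frequency_by_year(TOY_CORPUS, "railway").items():
        print(year, round(share, 3))
```

Dividing by the yearly total matters because far more books survive from some years than others; raw counts alone would mostly show how much was published, not how much a word was used. With dirty data, a rise or fall in these shares is only a hint of a trend, which is why the verification step still falls to people.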

It was a very productive morning that provided me with many resources to explore as well as a much better understanding of what digital humanities is. As Sample put it: “Digital humanities are what digital humanists are doing at any given time.”

4 Comments on ‘Digital Humanities Symposium at UNCC’

How fascinating, Susan!! What fun! I’m so glad these conversations are going on locally. It’s such important work.

That Ngram viewer is fascinating. Thanks for the post and the insight into Digital Humanities!

That Old Bailey site is addictive – really a remarkable collection of material. Thanks!

Great write-up, Susan. I found this experience very valuable.